From 3 days to 5 minutes: How Docker Compose saves my client €150,000 a year

Management Summary

In modern software development, setting up development, test and acceptance environments costs an average of 2-3 days per team and release cycle. For a 7-person development team, this adds up to over €150,000 per year - pure waiting time, not added value.

With a well-thought-out Docker Compose setup, this process can be reduced to less than 5 minutes. This article uses a real SaaS project to show how this works: technically sound, yet pragmatic to implement.

Key Takeaways:

- ROI: 99% time saving during environment setup

- Infrastructure as code: Consistency from Dev to Production

- Field-tested setup for complex multi-service architectures

- Security hardening without complexity overhead

The Monday when nothing worked

It's 9:00 a.m., Monday morning. The development team is ready, sprint planning is complete, the user stories have been prioritized. Then the announcement: "We have to set up the new test environment before we can get started."

Anyone who works in software development knows what follows:

The first developer installs Postgres - version 16, because that is the latest version. The second sets up Redis - with different configuration parameters than in production. The third struggles with MinIO because the bucket names are not correct. And the DevOps engineer is desperately trying to get Traefik up and running while everyone else waits.

On Tuesday lunchtime, everything is finally up and running. Almost. The versions do not match, the configuration differs from production, and the next update starts all over again.

The bill is brutal:

- 7 developers × 16 hours × €80/hour = €8,960 for ONE environment

- × 3 environments (dev, test, acceptance) = €26,880

- × 6 release cycles per year = €161,280

And these are just the direct costs. Not included: Frustration, context switches, delayed releases.

The solution: Infrastructure as code - but the right way

"Why don't you just use Docker?" - A legitimate question, but it falls short. Docker alone does not solve the problem. Kubernetes? Overkill for most projects and often more complex than the original problem.

The answer lies in the middle: Docker Compose as "Infrastructure as Code Light".

With a well-structured Docker Compose setup, three days of setup hell becomes a single command:

```bash
git clone project-repo
docker compose --profile dev up -d
```

5 minutes later: All services are running, consistently configured, with the correct versions.

Sounds too good to be true? Let's take a look at what it looks like in practice.

The stack: a real SaaS example

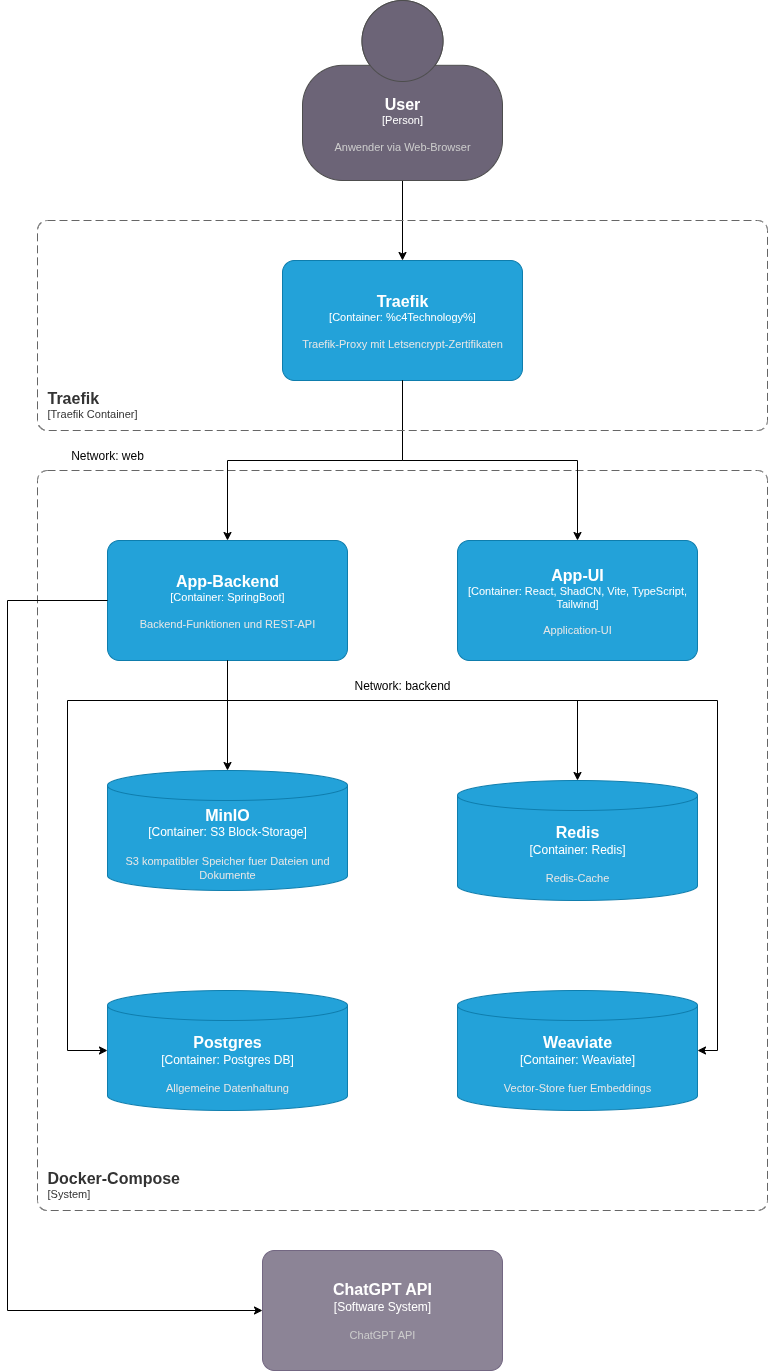

Our example comes from a real project: a modern SaaS application with a typical microservice architecture:

Front-end tier:

- Traefik as a reverse proxy and SSL termination with Let's Encrypt

- React UI with Vite, TypeScript, ShadCN and TailwindCSS

Backend tier:

- Spring Boot Microservice as API layer

- PostgreSQL for relational data

- Weaviate Vector-DB for embeddings and semantic search

- Redis for caching and session management

- MinIO as S3-compatible object storage

- OpenAI API integration for AI-supported features

Eight services, three databases, AI integration, complex dependencies. Exactly the type of setup that normally takes days.

This is what it looks like in practice:

Illustration: All services run in Docker Compose with strict network separation between the web and backend layers.

The entire setup starts with a single command: docker compose up -d

The secret: profiles and environment separation

The key lies in the intelligent use of Docker Compose Profiles. We distinguish between two modes:

Dev profile: Only backend services run in containers

- Frontend runs locally (hot reload, fast development)

- Databases and external services containerized

- Ports exposed for direct access with GUI tools

Prod profile: Full stack

- All services containerized

- Traefik routing with SSL

- Network isolation between web and backend

- Security hardening active

A simple export COMPOSE_PROFILES=dev decides which services start. No separate compose file, no duplication, no inconsistencies.
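The article does not show its compose file, so here is a minimal sketch of how profiles could be assigned in a single file. The image names and tags are hypothetical, and only three services are shown. Services without a `profiles` key start regardless of which profile is active; services with one only start when that profile is enabled.

```yaml
# Sketch: one compose file for both modes (image names and tags are hypothetical)
services:
  postgres:
    image: postgres:17
    # no "profiles" key: starts no matter which profile is active
    ports:
      - "5432:5432"   # exposed for GUI tools such as DBeaver
  redis:
    image: redis:7
    ports:
      - "6379:6379"   # exposed for RedisInsight
  ui:
    image: registry.example.com/cv-ui:1.0.0   # hypothetical image
    profiles: ["prod"]   # in dev, the React UI runs locally with Vite hot reload
```

Without an active profile, only postgres and redis start; with --profile prod (or COMPOSE_PROFILES=prod), the ui service joins them.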

The underestimated game changers

Beyond the obvious advantages, there are three aspects that make the biggest difference in practice:

1. The "clean slate" at the touch of a button

Everyone knows the problem: the development environment has been running for weeks. Tests have been run, data has been changed manually, debugging sessions have left their mark. Suddenly the system behaves differently than expected - but is it the code or the "dirty" data?

With Docker Compose:

```bash
docker compose down -v   # -v also removes the volumes, i.e. all data
docker compose up -d
```

30 seconds later: A completely fresh environment. No legacy issues, no artifacts, no "but it works for me". That's the difference between "let's give it a try" and "we know it works".

2. Automatic database setup with Flyway

The database does not start empty. Flyway migrations run automatically at startup:

- Schema changes are imported

- Seed data for tests are loaded

- Versioning of the DB structure is part of the code

A new developer not only receives a running DB, but a DB in exactly the right state - including all migrations that have taken place in recent months.
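The article does not say how Flyway is wired in. In a Spring Boot service, Flyway typically runs on application startup as soon as flyway-core is on the classpath. An alternative is a one-off migration container based on the official flyway/flyway image, sketched below. It assumes a `postgres` service with a database named `app`, credentials in POSTGRES_USER / POSTGRES_PASSWORD, and migration files in a hypothetical ./db/migration directory.

```yaml
# Sketch: one-off migration container (database name and paths are assumptions)
services:
  flyway:
    image: flyway/flyway:10
    # Applies all pending versioned migrations (schema and seed data), then exits
    command: >
      -url=jdbc:postgresql://postgres:5432/app
      -user=${POSTGRES_USER}
      -password=${POSTGRES_PASSWORD}
      -connectRetries=10
      migrate
    volumes:
      - ./db/migration:/flyway/sql:ro   # e.g. V1__init.sql, V2__seed_test_data.sql
    depends_on:
      - postgres
```

The -connectRetries option lets Flyway keep retrying while Postgres is still starting, so the migration does not fail just because it came up a few seconds early.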

3. Reproduce production errors in 5 minutes

"The error only occurs with customers who are still using version 2.3." - Every developer's nightmare.

With Docker Compose:

```bash
export CV_APP_TAG=2.3.0
export POSTGRES_TAG=16
docker compose up -d
```

The exact production environment runs locally. Same versions, same configuration, same database migrations. The error is reproducible, debuggable, fixable.

No VM, no complex setup, no week of preparation. The support call comes in, 5 minutes later the developer is debugging in the customer's exact setup.
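For those export commands to have any effect, the compose file has to read the image tags from the environment. A minimal sketch, assuming a hypothetical registry path for the application image and hypothetical default tags; the `:-` syntax falls back to the pinned default when the variable is not set.

```yaml
# Sketch: image tags driven by environment variables (registry and defaults are hypothetical)
services:
  cv-app:
    # CV_APP_TAG=2.3.0 reproduces the customer's release
    image: registry.example.com/cv-app:${CV_APP_TAG:-2.4.0}
  postgres:
    # POSTGRES_TAG=16 matches the customer's database version
    image: postgres:${POSTGRES_TAG:-17}
```

Running docker compose config prints the fully resolved file, which is a quick way to check which versions will actually start.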

Traefik: More than just a reverse proxy

A detail that is often overlooked: Traefik runs in a separate container and is not part of the service stack definition. This has a decisive advantage.

In small to medium-sized production environments, this one Traefik container can serve as a load balancer for several applications:

```
# One Traefik for all projects
traefik-container → cv-app-stack
                  → crm-app-stack
                  → analytics-app-stack
```

Instead of configuring and maintaining three separate load balancers (or three nginx instances), a single Traefik manages all routing rules. SSL certificates? Let's Encrypt takes care of them automatically, for all domains.

The setup scales elegantly: from one project on one server to several stacks - without having to change the architecture.
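How an application stack might attach to the shared Traefik instance, as a sketch: it assumes Traefik v2 or v3 with the Docker provider, an externally created Docker network named `web`, and an entrypoint `websecure` plus a certificate resolver `letsencrypt` defined in Traefik's static configuration. The domain and image are placeholders.

```yaml
# cv-app stack: Traefik itself runs in its own, separate stack
services:
  cv-app:
    image: registry.example.com/cv-app:${CV_APP_TAG:-2.4.0}   # hypothetical image
    networks:
      - web
    labels:
      - "traefik.enable=true"
      - "traefik.docker.network=web"
      - "traefik.http.routers.cv-app.rule=Host(`cv.example.com`)"
      - "traefik.http.routers.cv-app.entrypoints=websecure"
      - "traefik.http.routers.cv-app.tls.certresolver=letsencrypt"
      - "traefik.http.services.cv-app.loadbalancer.server.port=8080"

networks:
  web:
    external: true   # created once, e.g. with: docker network create web
```

The CRM and analytics stacks would carry the same kind of labels with their own router names and hosts; Traefik discovers them from the Docker events without any change to its own stack.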

Security: Pragmatic instead of dogmatic

"But aren't open database ports a security risk?" - An absolutely justified question.

The answer lies in the context: dev and test are not production.

In development and test environments:

- Ports are deliberately exposed for debugging

- Developers use GUI tools (DBeaver, RedisInsight, MinIO Console)

- The environments run in isolated, non-public networks

- Trade-off: developer productivity vs. theoretical risk

In production:

- Access only via VPN or bastion host

- Strict firewall rules

- Monitoring and alerting

- The same compose file, different environment variables

A common mistake is to secure dev environments like Fort Knox, leaving developers to struggle with SSH tunnels and port forwarding. The result: wasted time and frustrated teams.

The trick is to find the right trade-off.
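One way to implement "the same compose file, different environment variables" for database ports, as a sketch under assumptions: a hypothetical variable DB_BIND_ADDR is set to 0.0.0.0 in the stage files of the isolated dev and test networks, and left unset in production. There, the port falls back to the loopback interface and is only reachable from the server itself, for example through a tunnel from the bastion host.

```yaml
# Sketch: same file, different exposure per stage (DB_BIND_ADDR is a hypothetical variable)
services:
  postgres:
    image: postgres:${POSTGRES_TAG:-17}
    ports:
      # .env.dev / .env.test: DB_BIND_ADDR=0.0.0.0 so DBeaver can connect directly
      # .env.prod: unset, so the port is bound to 127.0.0.1 only
      - "${DB_BIND_ADDR:-127.0.0.1}:5432:5432"
```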

Security hardening: where it counts

Where we make no compromises: Container security.

Every service runs with the following settings:

- Read-only file system: Prevents manipulation at runtime

- Non-root user (UID 1001): Minimum privileges

- Dropped capabilities: cap_drop: ALL, then add back only what is really needed

- No new privileges: Prevents privilege escalation

- tmpfs for temporary data: Limited, volatile memory

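```yaml
services:
  cv-app:
    user: "1001:1001"            # run as non-root user and group
    read_only: true              # root file system is mounted read-only
    tmpfs:
      - /tmp:rw,noexec,nosuid,nodev,size=100m   # small, volatile scratch space
    security_opt:
      - no-new-privileges:true   # block privilege escalation, e.g. via setuid binaries
    cap_drop:
      - ALL                      # drop all Linux capabilities
```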

This is not overhead - it is a standard configuration that is set up once and then applies to all environments.

Network Isolation: Defense in Depth

Two separate Docker networks create clear boundaries:

Web-Network: Internet-facing

- Traefik Proxy

- UI service

- API gateway

Backend-Network: Internal only

- Databases (Postgres, Redis, Weaviate)

- Object Storage (MinIO)

- Internal services

A compromised container in the web network has no direct access to the databases. Defense in Depth - implemented in practice, not just on slides.
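The two networks could be declared like this; a fragment with assumed service names, images and other settings omitted. The API service is the only one attached to both networks, which is what lets it reach the databases while the UI cannot. The `web` network is assumed to be the one shared with the separate Traefik stack.

```yaml
# Sketch: two-tier network separation (service names are assumptions, other keys omitted)
services:
  ui:
    networks: [web]            # reachable via Traefik only
  api:
    networks: [web, backend]   # the only bridge between the two layers
  postgres:
    networks: [backend]        # no route from the web network
  redis:
    networks: [backend]
  weaviate:
    networks: [backend]
  minio:
    networks: [backend]

networks:
  web:
    external: true             # shared with the Traefik stack
  backend: {}                  # project-local network
```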

Environment variables: The underestimated problem

An often overlooked aspect: How do you manage configuration across different environments?

- The bad solution: Passwords in the Git repository

- The complicated solution: Vault, secrets managers, complex toolchains

- The pragmatic solution: Stage-specific .env files, outside of Git

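```
.env.dev       # For local development
.env.test      # For test environment
.env.staging   # For acceptance
.env.prod      # For production (stored in encrypted form)
```

A simple docker compose --env-file .env.dev up -d selects the stage, and you are ready. A plain source .env.dev only works if the variables are exported, for example with set -a before sourcing; otherwise they stay in the shell and never reach Compose.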

For production: Secrets injected via CI/CD pipeline or stored encrypted with SOPS/Age. But not every environment needs enterprise-grade secrets management.
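A small safeguard that fits the stage-file approach: Compose's `${VAR:?message}` syntax aborts with an error when a variable is missing, so a forgotten entry in a stage file fails loudly instead of starting a database with an empty password. The variable names, including OPENAI_API_KEY, are assumptions.

```yaml
# Sketch: fail fast on missing configuration (variable names are assumptions)
services:
  postgres:
    image: postgres:${POSTGRES_TAG:-17}
    environment:
      POSTGRES_USER: ${POSTGRES_USER:?POSTGRES_USER missing in the stage .env file}
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD:?POSTGRES_PASSWORD missing in the stage .env file}
  cv-app:
    environment:
      OPENAI_API_KEY: ${OPENAI_API_KEY:?OPENAI_API_KEY missing in the stage .env file}
```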

The workflow in practice

New developer in the team:

```bash
git clone project-repo
cd project-repo
cp .env.example .env.dev
# Enter API keys in .env.dev
docker compose --env-file .env.dev --profile dev up -d
```

4 commands, 5 minutes, and the developer is productive.

Deployment in Test:

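```bash
ssh test-server
cd project-repo
git pull
docker compose --profile prod pull
docker compose --profile prod up -d --force-recreate
```

Zero-downtime deployment? Health checks in the compose file are the foundation:

```yaml
healthcheck:
  # assumes curl is installed in the application image
  test: ["CMD-SHELL", "curl -f http://localhost:8080/actuator/health || exit 1"]
  interval: 30s
  timeout: 10s
  retries: 3
  start_period: 60s
```

With health checks in place, docker compose up -d --wait only returns once the services report healthy, and dependent services can wait for them via depends_on. Plain Compose does not perform rolling updates, though: it stops the old container before starting its replacement, so a brief interruption remains. True zero-downtime needs an additional step, for example running the new version alongside the old one behind Traefik.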

Lessons learned: What I would do differently

After several projects with this setup - some insights:

1. Invest time in the initial compose file: The first 2-3 days of setup pay off a hundredfold over the course of the project. Every hour invested here saves days later.

2. Health checks are not optional: Without health checks (and depends_on conditions that use them), services may start before the database is ready. This leads to mysterious errors and long debugging sessions.

3. Version pinning is your friend: postgres:latest is convenient, until an update breaks everything. postgres:17 creates reproducibility.

4. Document your trade-offs: Why are ports open? Why this approach instead of another? Your future self (and your team) will thank you.

5. One compose file, multiple stages: Trying to maintain separate compose files for each environment ends in chaos. Use one file, controlled via environment variables and profiles.
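Point 2 in practice, as a sketch: a pg_isready health check on the database (the tool ships with the official Postgres image) combined with a depends_on condition, so the API container only starts once the database accepts connections. Service names, the image path, and the timings are assumptions.

```yaml
# Sketch: start the API only after Postgres is ready (names and timings are assumptions)
services:
  postgres:
    image: postgres:${POSTGRES_TAG:-17}
    healthcheck:
      # pg_isready only checks whether the server accepts connections over TCP
      test: ["CMD", "pg_isready", "-h", "localhost"]
      interval: 5s
      timeout: 5s
      retries: 10

  cv-app:
    image: registry.example.com/cv-app:${CV_APP_TAG:-2.4.0}   # hypothetical image
    depends_on:
      postgres:
        condition: service_healthy   # wait for a healthy database, not just a started container
```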

The ROI: convincing figures

Back to our Monday morning scenario. With Docker Compose:

Before:

- Setup time: 16-24 hours per developer

- Inconsistencies between environments: frequent

- Debugging of environmental problems: several hours per week

- Costs per year: €160,000+

After:

- Setup time: 5 minutes

- Inconsistencies: virtually eliminated

- Debugging of environmental problems: rare

- Costs for initial setup: approx. 16 hours = €1,280

- Savings in the first year: €158,720

And that doesn't even take into account the soft factors: Less frustration, faster onboarding time, more time for features instead of infrastructure.

Who is it suitable for?

Docker Compose is not a silver bullet. It is not a good fit for:

- Highly scaled microservice architectures (100+ services)

- Multi-region deployments with complex routing

- Environments that require automatic scaling

It is perfect for:

- Start-ups and scale-ups up to 50 developers

- SaaS products with 5-20 services

- Agencies with several client projects

- Any team that wastes time with environment setup

The scaling path: from Compose to Kubernetes

An often underestimated advantage: Docker Compose is not a dead-end investment. If your project grows and needs real Kubernetes, migration is surprisingly easy.

Why? Because the container structure is already in place:

- Images are built and tested

- Environment variables are documented

- Network topology is defined

- Health checks are implemented

Tools such as Kompose automatically convert Compose files to Kubernetes manifests. The migration is not a rewrite, but a lift-and-shift.

The recommendation: Start with Compose. If you really need Kubernetes (not because it sounds cool at conferences, but because you have real problems), you have a solid basis for the migration. Until then: Keep it simple.

The first step

The question is not whether Docker Compose is the right tool. The question is: How much time is your team currently wasting on environment setup?

Do the math:

- Number of developers × hours for setup × hourly rate × number of setups per year

If the result runs to four figures, the investment is worthwhile. If it runs to five figures, you should have started yesterday.

About the author: Andre Jahn supports companies in optimizing their development processes and pragmatically implementing Infrastructure as Code. With over 20 years of experience in software development and DevOps, the focus is on solutions that actually work - not just on slides.